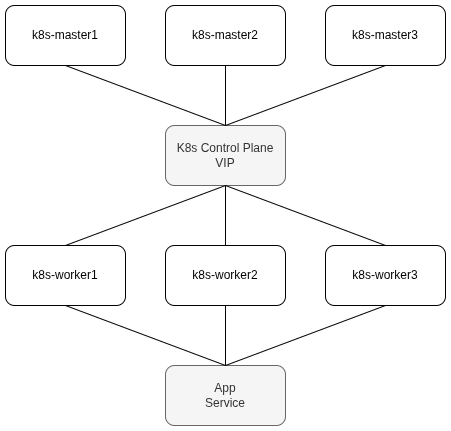

Deploy K8s HA (Multi-Master) on Ubuntu servers using Ansible

Ansible inventory file:

```ini
[masters]
k8s-master1 ansible_host=10.10.10.11 node_name=k8s-master1 ansible_user=root ansible_ssh_private_key_file=~/.ssh/id_ed25519
k8s-master2 ansible_host=10.10.10.12 node_name=k8s-master2 ansible_user=root ansible_ssh_private_key_file=~/.ssh/id_ed25519
k8s-master3 ansible_host=10.10.10.13 node_name=k8s-master3 ansible_user=root ansible_ssh_private_key_file=~/.ssh/id_ed25519

[workers]
k8s-worker1 ansible_host=10.10.10.21 node_name=k8s-worker1 ansible_user=root ansible_ssh_private_key_file=~/.ssh/id_ed25519
k8s-worker2 ansible_host=10.10.10.22 node_name=k8s-worker2 ansible_user=root ansible_ssh_private_key_file=~/.ssh/id_ed25519
k8s-worker3 ansible_host=10.10.10.23 node_name=k8s-worker3 ansible_user=root ansible_ssh_private_key_file=~/.ssh/id_ed25519
```

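Every host line in the inventory repeats the same `ansible_user` and `ansible_ssh_private_key_file` values. The sketch below expresses the same inventory in Ansible's YAML inventory format and moves those shared connection settings to the `all` group. The filename is your choice; `hosts.yml` is only an assumption. Group and host names are unchanged, so the playbook's `masters`, `masters[0]` and `workers` patterns still resolve the same way.

```yaml
# Sketch: YAML-format equivalent of the INI inventory above.
# Connection settings shared by every host are declared once under all.vars.
all:
  vars:
    ansible_user: root
    ansible_ssh_private_key_file: ~/.ssh/id_ed25519
  children:
    masters:
      hosts:
        k8s-master1:
          ansible_host: 10.10.10.11
          node_name: k8s-master1
        k8s-master2:
          ansible_host: 10.10.10.12
          node_name: k8s-master2
        k8s-master3:
          ansible_host: 10.10.10.13
          node_name: k8s-master3
    workers:
      hosts:
        k8s-worker1:
          ansible_host: 10.10.10.21
          node_name: k8s-worker1
        k8s-worker2:
          ansible_host: 10.10.10.22
          node_name: k8s-worker2
        k8s-worker3:
          ansible_host: 10.10.10.23
          node_name: k8s-worker3
```

Before running the playbook, `ansible-inventory -i hosts.yml --graph` prints the group tree. This is a quick way to check that both groups contain the expected hosts.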

Ansible playbook:

```yaml
---
- hosts: all
  become: true
  vars:
    kubernetes_version: "1.35.1"
    containerd_version: "1.7"
    interface: "enp1s0"
    vip_address: "10.10.10.10"
  tasks:
    - name: Disable swap
      command: swapoff -a

    # Skip lines that are already commented out, so repeated runs do not
    # keep prefixing "# " to the swap entry.
    - name: Ensure swap is disabled in fstab
      replace:
        path: /etc/fstab
        regexp: '^([^#].*\s+swap\s+.*)$'
        replace: '# \1'

    - name: Load required kernel modules
      modprobe:
        name: "{{ item }}"
        state: present
      loop:
        - overlay
        - br_netfilter

    # modprobe only loads the modules for the current boot; this file makes
    # systemd load them again after a reboot.
    - name: Load required kernel modules on boot
      copy:
        dest: /etc/modules-load.d/k8s.conf
        content: |
          overlay
          br_netfilter
        mode: '0644'

    - name: Set sysctl parameters for Kubernetes
      sysctl:
        name: "{{ item.name }}"
        value: "{{ item.value }}"
        state: present
        reload: yes
      loop:
        - { name: net.bridge.bridge-nf-call-iptables, value: 1 }
        - { name: net.bridge.bridge-nf-call-ip6tables, value: 1 }
        - { name: net.ipv4.ip_forward, value: 1 }
        - { name: net.ipv4.ip_nonlocal_bind, value: 1 }
        - { name: net.ipv4.conf.all.arp_ignore, value: 1 }
        - { name: net.ipv4.conf.all.arp_announce, value: 2 }

    - name: Ensure /etc/containerd exists
      file:
        path: /etc/containerd
        state: directory
        mode: '0755'

    - name: Install containerd
      apt:
        name: containerd
        state: present
        update_cache: true

    - name: Generate the default containerd config
      command: containerd config default
      register: containerd_config
      changed_when: false

    - name: Write containerd config
      copy:
        dest: /etc/containerd/config.toml
        content: "{{ containerd_config.stdout }}"
      #notify: restart containerd

    - name: Enable systemd cgroup driver
      replace:
        path: /etc/containerd/config.toml
        regexp: 'SystemdCgroup = false'
        replace: 'SystemdCgroup = true'
      #notify: restart containerd

    - name: Restart containerd
      systemd:
        name: containerd
        state: restarted
        enabled: yes

    - name: Install dependencies
      apt:
        name:
          - apt-transport-https
          - ca-certificates
          - curl
          - gnupg
          - lsb-release
        state: present
        update_cache: true

    - name: Ensure apt keyrings directory exists
      file:
        path: /etc/apt/keyrings
        state: directory
        mode: '0755'

    # Release.key is ASCII-armored. apt accepts an armored key in signed-by
    # when the file ends in .asc, so the repository signature is verified
    # instead of being skipped with [trusted=yes].
    - name: Download Kubernetes repo key
      get_url:
        url: https://pkgs.k8s.io/core:/stable:/v1.35/deb/Release.key
        dest: /etc/apt/keyrings/kubernetes-apt-keyring.asc
        mode: '0644'
        force: yes

    - name: Add Kubernetes apt repository
      copy:
        dest: /etc/apt/sources.list.d/kubernetes.list
        content: |
          deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.asc] https://pkgs.k8s.io/core:/stable:/v1.35/deb/ /

    - name: Update apt cache
      apt:
        update_cache: yes

    - name: Install kubeadm, kubelet, kubectl
      apt:
        name:
          - kubeadm
          - kubelet
          - kubectl
        state: present
        update_cache: true

    # Prevent unattended upgrades from moving the cluster components.
    - name: Hold kubeadm, kubelet, kubectl at the installed version
      dpkg_selections:
        name: "{{ item }}"
        selection: hold
      loop:
        - kubeadm
        - kubelet
        - kubectl

- hosts: masters
  become: true
  vars:
    kubernetes_version: "1.35.1"
    containerd_version: "1.7"
    interface: "enp1s0"
    vip_address: "10.10.10.10"
  tasks:
    - name: Download k9s
      ignore_errors: yes
      get_url:
        url: https://github.com/derailed/k9s/releases/latest/download/k9s_Linux_amd64.tar.gz
        dest: /tmp/k9s.tar.gz
        mode: '0644'

    - name: Extract k9s
      ignore_errors: yes
      unarchive:
        src: /tmp/k9s.tar.gz
        dest: /usr/local/bin
        remote_src: yes
        include:
          - k9s
        creates: /usr/local/bin/k9s

    - name: Ensure k9s is executable
      ignore_errors: yes
      file:
        path: /usr/local/bin/k9s
        mode: '0755'

    - name: Ensure the static pod manifest directory exists
      file:
        path: /etc/kubernetes/manifests
        state: directory

    - name: Deploy kube-vip static pod (bootstrap mode)
      #force: no
      copy:
        dest: /etc/kubernetes/manifests/kube-vip.yaml
        content: |
          apiVersion: v1
          kind: Pod
          metadata:
            name: kube-vip
            namespace: kube-system
          spec:
            containers:
            - args:
              - manager
              env:
              - name: vip_arp
                value: "true"
              - name: port
                value: "6443"
              - name: vip_nodename
                valueFrom:
                  fieldRef:
                    fieldPath: spec.nodeName
              - name: vip_interface
                value: {{ interface }}
              - name: vip_subnet
                value: "32"
              - name: dns_mode
                value: first
              - name: cp_enable
                value: "true"
              - name: cp_namespace
                value: kube-system
              - name: svc_enable
                value: "true"
              - name: svc_leasename
                value: plndr-svcs-lock
              - name: address
                value: "{{ vip_address }}"
              - name: prometheus_server
                value: :2112
              image: ghcr.io/kube-vip/kube-vip:v1.0.1
              imagePullPolicy: IfNotPresent
              name: kube-vip
              resources: {}
              securityContext:
                capabilities:
                  add:
                  - NET_ADMIN
                  - NET_RAW
                  drop:
                  - ALL
              volumeMounts:
              - mountPath: /etc/kubernetes/admin.conf
                name: kubeconfig
            hostAliases:
            - hostnames:
              - kubernetes
              ip: 127.0.0.1
            hostNetwork: true
            volumes:
            - hostPath:
                path: /etc/kubernetes/admin.conf
              name: kubeconfig
          status: {}

- name: Initiate cluster using first master
  hosts: masters[0]
  become: true
  vars:
    kubernetes_version: "1.35.1"
    containerd_version: "1.7"
    interface: "enp1s0"
    vip_address: "10.10.10.10"
  tasks:
    - name: Check if cluster is already initialized
      stat:
        path: /etc/kubernetes/admin.conf
      register: kubeadm_admin_conf

    # Bootstrap workaround: bring the VIP up by hand so the control-plane
    # endpoint is reachable during kubeadm init. It is removed again after
    # the other masters have joined.
    - name: Temporarily add the VIP to the first master
      become: yes
      shell: |
        ip addr show dev {{ interface }} | grep -q "{{ vip_address }}" || \
        ip addr add {{ vip_address }}/24 dev {{ interface }}
      args:
        executable: /bin/bash
      when: not kubeadm_admin_conf.stat.exists

    - name: Initialize first master
      command: >
        kubeadm init
        --control-plane-endpoint {{ vip_address }}:6443
        --upload-certs
        --pod-network-cidr 10.244.0.0/16
      when: not kubeadm_admin_conf.stat.exists
      register: kubeadm_init

    - name: Copy admin.conf to root's kubeconfig on first master
      become: yes
      shell: mkdir -p /root/.kube && cp /etc/kubernetes/admin.conf /root/.kube/config
      args:
        executable: /bin/bash

    - name: Install Calico CNI
      shell: |
        kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/v3.27.2/manifests/calico.yaml --kubeconfig /etc/kubernetes/admin.conf
      args:
        creates: /etc/cni/net.d/10-calico.conflist

    - name: Save worker join command
      command: kubeadm token create --print-join-command
      register: join_command

    - name: Upload certificates and get certificate key
      command: kubeadm init phase upload-certs --upload-certs
      register: cert_key

    - name: Generate control-plane join command
      command: kubeadm token create --print-join-command --certificate-key {{ cert_key.stdout_lines[-1] }}
      register: join_command_cp

- hosts: masters[1:]
  become: true
  tasks:
    - name: Check if node already joined the cluster
      stat:
        path: /etc/kubernetes/kubelet.conf
      register: kubelet_conf

    - name: Join additional master nodes
      command: "{{ hostvars[groups['masters'][0]]['join_command_cp'].stdout }}"
      when: not kubelet_conf.stat.exists

    - name: Copy admin.conf to root's kubeconfig on other masters
      become: yes
      shell: mkdir -p /root/.kube && cp /etc/kubernetes/admin.conf /root/.kube/config
      args:
        executable: /bin/bash

- hosts: masters[0]
  vars:
    kubernetes_version: "1.35.1"
    containerd_version: "1.7"
    interface: "enp1s0"
    vip_address: "10.10.10.10"
  become: true
  tasks:
    # Only the manually added /24 address is removed; the /32 address that
    # kube-vip manages is left alone.
    - name: Remove the temporary VIP from the first master
      become: yes
      shell: |
        if ip addr show dev {{ interface }} | grep -q "{{ vip_address }}/24"; then
          ip addr del {{ vip_address }}/24 dev {{ interface }}
        fi
      args:
        executable: /bin/bash

- hosts: workers
  become: true
  tasks:
    - name: Check if node already joined the cluster
      stat:
        path: /etc/kubernetes/kubelet.conf
      register: kubelet_conf

    - name: Join worker nodes
      command: "{{ hostvars[groups['masters'][0]]['join_command'].stdout }}"
      when: not kubelet_conf.stat.exists

- name: Create app-blue Deployment and Service manifest
  hosts: masters[0]
  become: true
  tasks:
    - name: Write app-blue.yaml
      copy:
        dest: /root/app-blue.yaml
        content: |
          apiVersion: apps/v1
          kind: Deployment
          metadata:
            name: app-blue
            namespace: default
          spec:
            replicas: 2
            selector:
              matchLabels:
                app: app-blue
            template:
              metadata:
                labels:
                  app: app-blue
              spec:
                #affinity:
                #  podAntiAffinity:
                #    requiredDuringSchedulingIgnoredDuringExecution:
                #    - labelSelector:
                #        matchExpressions:
                #        - key: app
                #          operator: In
                #          values:
                #          - app-blue
                #      topologyKey: "kubernetes.io/hostname"
                containers:
                - name: app-blue
                  image: openterprise/blue:latest
                  resources:
                    requests:
                      cpu: 100m
                      memory: 100Mi
                  ports:
                  - containerPort: 5000
          ---
          kind: Service
          apiVersion: v1
          metadata:
            name: svc-blue
            namespace: default
          spec:
            selector:
              app: app-blue
            ports:
            - name: svc-blue
              protocol: TCP
              port: 80
              targetPort: 5000
              nodePort: 30005
            type: NodePort

    - name: Install test app
      shell: |
        kubectl apply -f /root/app-blue.yaml --kubeconfig /etc/kubernetes/admin.conf
```
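The commented-out `#notify: restart containerd` lines in the containerd tasks suggest the author intended to use a handler. As written, the default config is rewritten on every run and the cgroup flag is then patched again. Wiring up `notify` alone would therefore still restart containerd on every run.

A minimal sketch of one way to fix this follows. It sets the flag in memory with Jinja's `replace` filter, so the file on disk is identical from run to run. The handler is named `restart containerd` to match those comments, and the sketch assumes containerd is already installed by the earlier tasks.

The tasks are shown as a standalone play for readability. In practice they would replace "Write containerd config", "Enable systemd cgroup driver" and "Restart containerd" in the first play, and the `handlers` section would be added to that play.

```yaml
# Sketch: handler-based variant of the containerd configuration tasks.
- hosts: all
  become: true
  tasks:
    - name: Generate the default containerd config
      command: containerd config default
      register: containerd_config
      changed_when: false

    # Switch to the systemd cgroup driver before writing, so copy reports
    # "changed" (and notifies the handler) only when the content differs.
    - name: Write containerd config with the systemd cgroup driver
      copy:
        dest: /etc/containerd/config.toml
        content: "{{ containerd_config.stdout | replace('SystemdCgroup = false', 'SystemdCgroup = true') }}"
        mode: '0644'
      notify: restart containerd

    - name: Ensure containerd is enabled and running
      systemd:
        name: containerd
        state: started
        enabled: yes

  handlers:
    - name: restart containerd
      systemd:
        name: containerd
        state: restarted
```

Handlers run at the end of the play. Here that is still before any `kubeadm` command in the later plays, so containerd picks up the new cgroup driver before the cluster is bootstrapped.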

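After the playbook finishes, a short verification play can confirm that the pieces fit together. This is a sketch, not part of the original playbook. It rests on three assumptions:

- The `vip_address` value is the one used above.
- The API server still allows anonymous requests to `/livez`, which is the kubeadm default.
- The `openterprise/blue` app answers HTTP 200 on `/`.

Adjust the checks if any of these do not hold.

```yaml
# Sketch: post-install smoke test, run after the main playbook.
- name: Smoke-test the HA cluster
  hosts: masters[0]
  become: true
  vars:
    vip_address: "10.10.10.10"
  tasks:
    - name: Wait until every node reports Ready
      command: >
        kubectl wait --for=condition=Ready nodes --all --timeout=300s
        --kubeconfig /etc/kubernetes/admin.conf
      changed_when: false

    # The API server should answer through the kube-vip VIP, not only on
    # the first master's own address (uri fails on anything but HTTP 200).
    - name: Check the API server through the VIP
      uri:
        url: "https://{{ vip_address }}:6443/livez"
        validate_certs: false

    - name: Wait for the app-blue Deployment to become available
      command: >
        kubectl wait --for=condition=Available deployment/app-blue
        --namespace default --timeout=300s
        --kubeconfig /etc/kubernetes/admin.conf
      changed_when: false

    # Assumes the test app returns HTTP 200 on "/"; retries cover the time
    # kube-proxy needs to program the NodePort.
    - name: Call svc-blue on its NodePort via the first worker
      uri:
        url: "http://{{ hostvars[groups['workers'][0]]['ansible_host'] }}:30005/"
        status_code: 200
      register: blue
      until: blue.status == 200
      retries: 10
      delay: 6
```

Saved to its own file, the play can be run against the same inventory, e.g. `ansible-playbook -i <inventory> <smoke-test-playbook>`. Both paths are placeholders.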